Human Pose Estimation with Machine Learning

Introduction

Human pose estimation, the problem of localizing human joints (keypoints), has received substantial attention in the computer vision community. Accurate pose estimation is a key step toward understanding people in images and video.

Given a single RGB image, we want to determine the precise pixel locations of important keypoints of the body. Understanding a person's posture and limb articulation is useful for higher-level tasks such as action recognition. It is also a fundamental tool in fields such as human-computer interaction and animation. Although pose estimation is a well-established problem in vision, it has posed a number of difficult challenges over the years. A good pose estimation system must be robust to occlusion and severe deformation. It must also work well on rare and novel poses, and be invariant to changes in appearance caused by factors such as clothing and lighting. Early work tackles these difficulties with robust image features and sophisticated structured prediction: the former produce local interpretations, while the latter infer a globally consistent pose. Convolutional neural networks (ConvNets), however, have substantially reshaped this pipeline.

Human Pose Estimation. Courtesy: Stacked Hourglass Networks for Human Pose Estimation
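Many ConvNet-based estimators, including the Part Affinity Fields model used below, do not regress coordinates directly. Instead, they predict one confidence map (heatmap) per keypoint and read the keypoint location off its peak. The following is a minimal sketch of that last step for a single person. It assumes a NumPy array of shape (height, width, num_keypoints). The function name `keypoints_from_heatmaps` and the 0.1 threshold are hypothetical choices made for illustration.

```python
import numpy as np


def keypoints_from_heatmaps(heatmaps, threshold=0.1):
    """Return one (x, y) per keypoint, or (-1, -1) when its peak is too weak.

    heatmaps: array of shape (height, width, num_keypoints); this layout is
    an assumption made for the sketch.
    """
    coords = []
    for k in range(heatmaps.shape[2]):
        hm = heatmaps[:, :, k]
        # flat index of the strongest response, converted back to (row, col)
        row, col = np.unravel_index(np.argmax(hm), hm.shape)
        if hm[row, col] < threshold:
            coords.append((-1, -1))  # weak peak: report the joint as missing
        else:
            coords.append((int(col), int(row)))  # x = column, y = row
    return coords


demo = np.zeros((4, 5, 2))
demo[1, 3, 0] = 0.9  # keypoint 0 peaks at x=3, y=1; keypoint 1 has no response
print(keypoints_from_heatmaps(demo))  # [(3, 1), (-1, -1)]
```

A single argmax per map only works when there is one person in the image. With several people, each map has several peaks, and this is the multi-person setting the rest of the article addresses.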

How do we solve the problem?

With advances in convolutional neural networks, many vision problems have become much easier to solve. In this article, we will see how to use Realtime Multi-Person 2D Pose Estimation using Part Affinity Fields (Cao et al., CVPR 2017) to estimate human pose. We will use this model to extract body keypoints for multiple subjects in an image.

Using this library, we can generate, for any image, an array that gives the joint locations of each subject.
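The step that makes this method multi-person is the grouping of detected joints into people. In the paper, a candidate limb between two detected joints is scored by integrating the part affinity field along the segment that joins them. This integral measures how well the field vectors align with the limb's unit direction. The sketch below approximates the integral by averaging over evenly spaced samples. Several details are assumptions: the (height, width, 2) layout for one limb's field, the function name `limb_score` and the sample count. The repository's own implementation will differ in details.

```python
import numpy as np


def limb_score(paf, p1, p2, num_samples=10):
    """Approximate the PAF line integral between joints p1 and p2.

    paf: array of shape (height, width, 2) holding the (x, y) components of
         one limb's part affinity field (layout assumed for this sketch).
    p1, p2: (x, y) pixel coordinates of the two candidate joints.
    """
    p1 = np.asarray(p1, dtype=float)
    p2 = np.asarray(p2, dtype=float)
    direction = p2 - p1
    norm = np.linalg.norm(direction)
    if norm == 0:
        return 0.0  # degenerate limb: both joints at the same pixel
    unit = direction / norm
    # evenly spaced points on the segment from p1 to p2 (endpoints included)
    ts = np.linspace(0.0, 1.0, num_samples)
    points = p1 + ts[:, None] * direction
    xs = np.clip(np.round(points[:, 0]).astype(int), 0, paf.shape[1] - 1)
    ys = np.clip(np.round(points[:, 1]).astype(int), 0, paf.shape[0] - 1)
    vectors = paf[ys, xs]  # field vectors sampled along the segment, shape (num_samples, 2)
    # mean alignment between the field and the limb direction
    return float(np.mean(vectors @ unit))


field = np.zeros((10, 10, 2))
field[:, :, 0] = 1.0  # a field pointing in +x everywhere
print(limb_score(field, (1, 5), (8, 5)))  # 1.0: limb aligned with the field
print(limb_score(field, (5, 1), (5, 8)))  # 0.0: limb perpendicular to the field
```

A high score means the two joints are likely connected. The paper then uses these scores in a bipartite matching for each limb type to assemble full skeletons. That matching step is not shown here.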

After downloading the pre-trained weights, clone the GitHub repository and install the requirements with `pip install -r requirements.txt`.

- Import all the necessary modules.

```python
# import all the necessary modules
import math

import scipy
import keras
from keras.models import Sequential, Model
from keras.layers import Input, Dense, Activation, Lambda
# these layers are also exported directly from keras.layers, which avoids
# submodule paths (convolutional, pooling, ...) missing in newer Keras releases
from keras.layers import Conv2D, MaxPooling2D, BatchNormalization, Concatenate
```

- Import the pose estimation model and processing module.

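```python
# import the pose estimation model and processing module
from PoseEstimationModel import *
from PoseEstimationProcessing import *

import cv2
import matplotlib
import matplotlib.pyplot as plt
import numpy as np

# load a test image
test_image = 'test.jpg'
oriImg = cv2.imread(test_image)  # OpenCV loads channels in B, G, R order
if oriImg is None:  # cv2.imread returns None instead of raising on a bad path
    raise FileNotFoundError(test_image)
print(oriImg.shape)  # (900, 1350, 3) for the article's test image

# reorder channels to R, G, B for Matplotlib
plt.imshow(oriImg[:, :, [2, 1, 0]])
```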

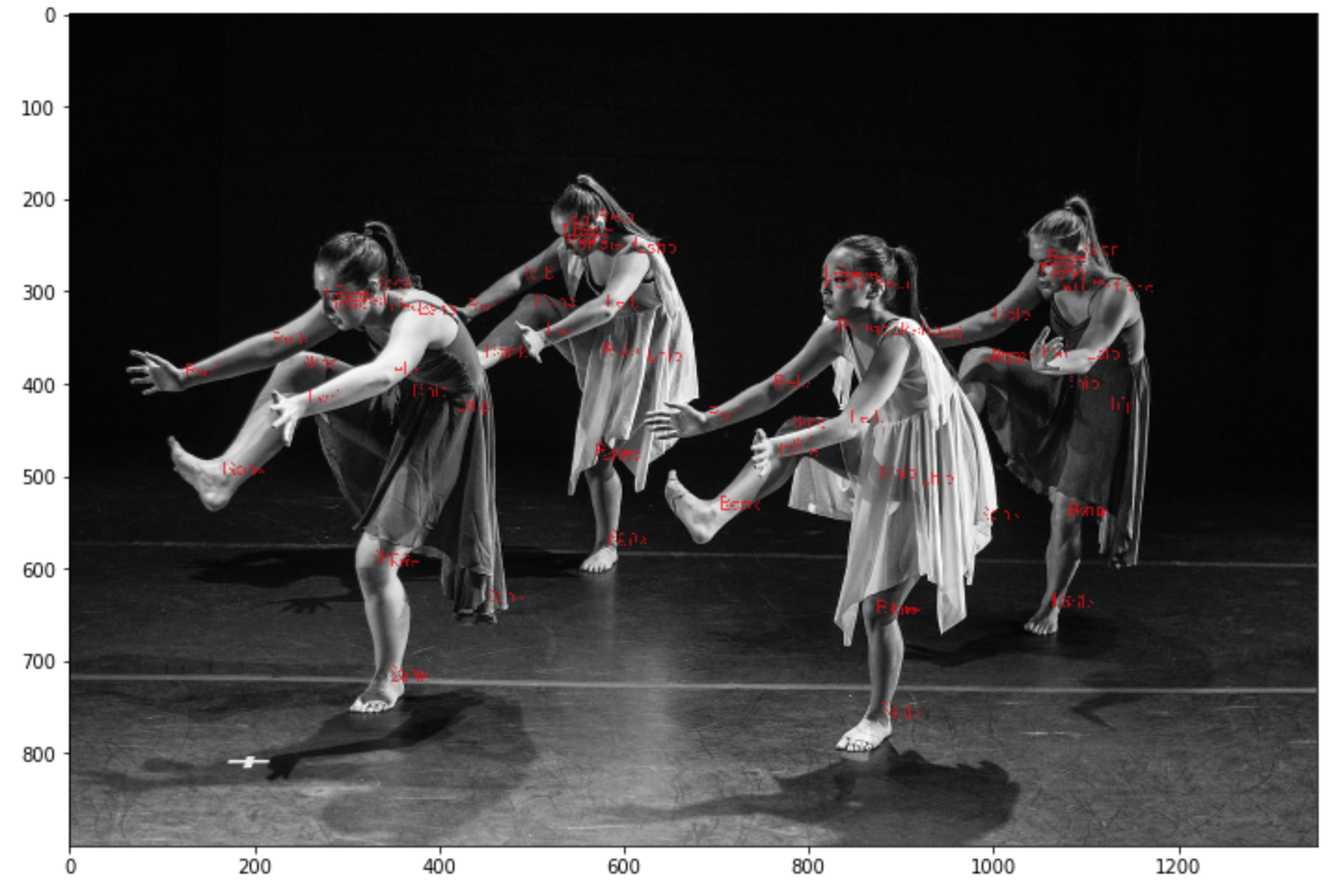
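OpenCV returns channels in B, G, R order, but Matplotlib expects R, G, B. That is why the image is indexed with `[:,:,[2,1,0]]` before plotting. The NumPy-only check below confirms that this indexing is the same as reversing the last axis. The equivalent OpenCV call is `cv2.cvtColor(img, cv2.COLOR_BGR2RGB)`. The 1x2 image is made-up data.

```python
import numpy as np

# a made-up 1x2 BGR image: one pure-blue pixel and one pure-red pixel
bgr = np.array([[[255, 0, 0], [0, 0, 255]]], dtype=np.uint8)

rgb_indexed = bgr[:, :, [2, 1, 0]]  # the indexing used in the article (makes a copy)
rgb_reversed = bgr[..., ::-1]       # reverse the channel axis (returns a view)

print(np.array_equal(rgb_indexed, rgb_reversed))  # True
print(rgb_indexed[0, 0].tolist())  # [0, 0, 255]: in RGB, blue is the last channel
```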

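```python
from PlotPoints import *

processor = PoseEstimationProcessing()  # load the processor

# pemodel is not defined in the snippets shown here; it is expected to be the
# pre-trained model from the PoseEstimationModel module, loaded with the
# downloaded weights
shared_pts = processor.shared_points(pemodel, oriImg)  # joint candidates shared across all subjects

plot_body_parts(test_image, shared_pts)  # draw the detected body parts on the image
```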

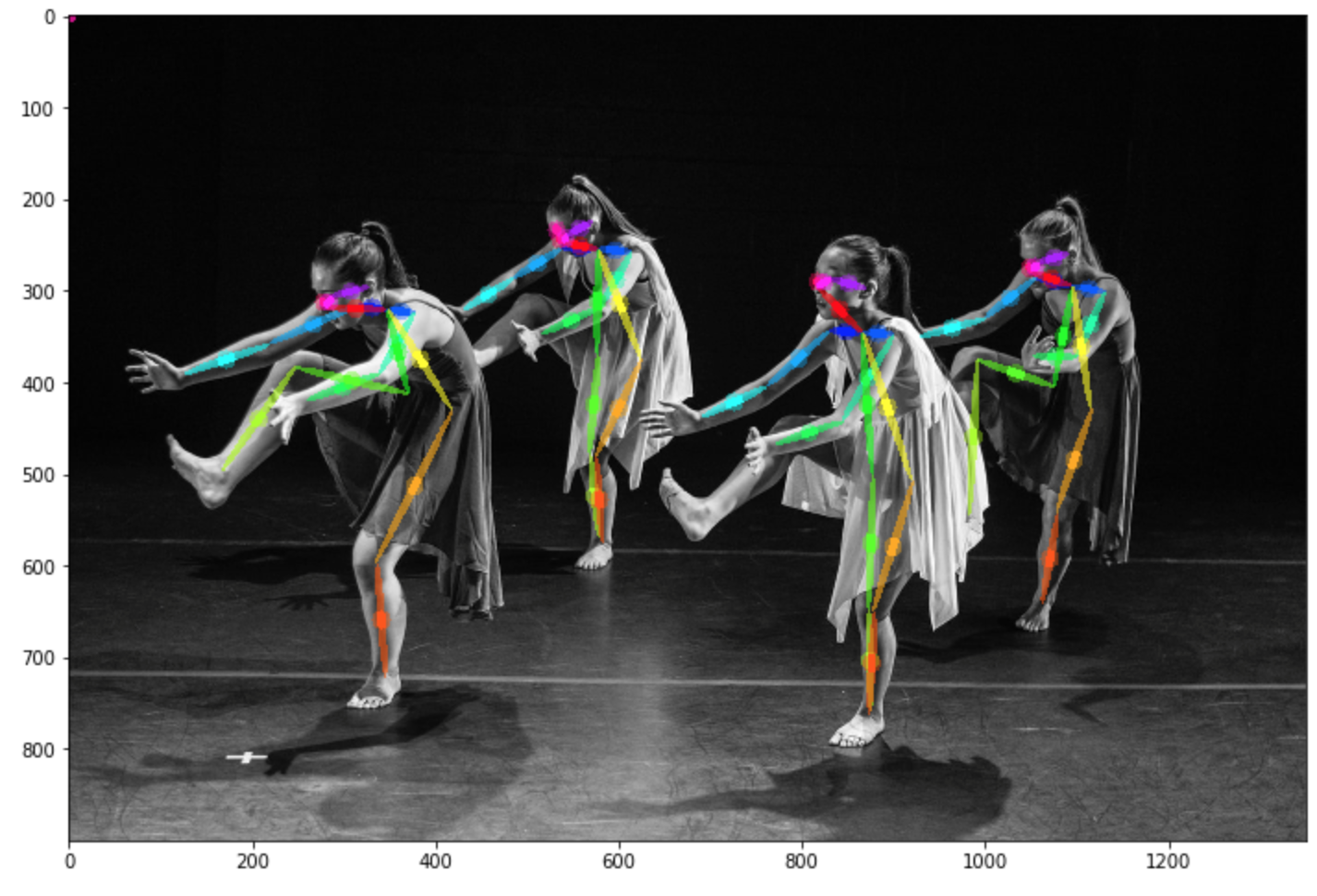
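`shared_points` collects joint candidates for all subjects at once. When several people are in the frame, each body part's confidence map has several peaks, so one argmax per map is not enough. We have not checked how `shared_points` handles this internally. A common approach is to keep every local maximum above a threshold. The sketch below does this for one 2-D confidence map in plain NumPy. The function name `find_peaks` and the threshold are hypothetical.

```python
import numpy as np


def find_peaks(confidence_map, threshold=0.1):
    """Return (x, y) of every local maximum above threshold in a 2-D map."""
    conf = np.asarray(confidence_map, dtype=float)
    # pad with -inf so border pixels only compete with real neighbours
    padded = np.pad(conf, 1, mode="constant", constant_values=-np.inf)
    center = padded[1:-1, 1:-1]
    is_peak = (
        (center >= padded[:-2, 1:-1])    # neighbour above
        & (center >= padded[2:, 1:-1])   # neighbour below
        & (center >= padded[1:-1, :-2])  # neighbour to the left
        & (center >= padded[1:-1, 2:])   # neighbour to the right
        & (center > threshold)
    )
    rows, cols = np.nonzero(is_peak)
    return [(int(c), int(r)) for r, c in zip(rows, cols)]


conf = np.zeros((6, 8))
conf[1, 2] = 0.8  # e.g. the nose of one subject
conf[4, 6] = 0.6  # the nose of another subject
print(find_peaks(conf))  # [(2, 1), (6, 4)]
```

Because the comparison uses `>=`, two equal neighbouring values are both reported. Real implementations usually smooth the map first to avoid such plateaus.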

```python
# group the shared points into joint locations per subject
subject_wise_loc = processor.subject_points(shared_pts)
subject_wise_loc = np.array(subject_wise_loc)
```

Here, processor.subject_points() returns an array indexed by (body part, subject, coordinates), where the last axis holds the X and Y coordinates of the joint. Given an image, it therefore gives the coordinates of every body joint for all subjects in the frame. The body parts are ordered as follows (R = right, L = left; sho = shoulder, elb = elbow, wri = wrist, kne = knee, ank = ankle): [nose, neck, Rsho, Relb, Rwri, Lsho, Lelb, Lwri, Rhip, Rkne, Rank, Lhip, Lkne, Lank, Leye, Reye, Lear, Rear]. Some joints may be missing for a subject, for example when they are occluded or outside the frame. A missing joint has coordinates (-1, -1).
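To work with one person at a time, it can help to regroup this array by subject and by joint name. The sketch below assumes the array has exactly the layout described above: shape (18, num_subjects, 2), with (-1, -1) for missing joints. `BODY_PARTS` copies the ordering listed above. `joints_by_subject` is a hypothetical helper and is not part of the library.

```python
import numpy as np

# body-part order as listed in the article
BODY_PARTS = ["nose", "neck", "Rsho", "Relb", "Rwri", "Lsho", "Lelb", "Lwri",
              "Rhip", "Rkne", "Rank", "Lhip", "Lkne", "Lank",
              "Leye", "Reye", "Lear", "Rear"]


def joints_by_subject(subject_wise_loc):
    """Regroup a (body part, subject, 2) array into one dict per subject.

    Missing joints, marked (-1, -1), are left out of each dict.
    """
    locs = np.asarray(subject_wise_loc)
    num_parts, num_subjects = locs.shape[0], locs.shape[1]
    subjects = []
    for s in range(num_subjects):
        joints = {}
        for p in range(num_parts):
            x, y = locs[p, s]
            if x == -1 and y == -1:
                continue  # joint not detected for this subject
            joints[BODY_PARTS[p]] = (float(x), float(y))
        subjects.append(joints)
    return subjects


fake = -np.ones((18, 2, 2))  # made-up data: 18 parts, 2 subjects, all missing
fake[0, 0] = (120, 40)       # nose of subject 0
fake[1, 1] = (300, 90)       # neck of subject 1
print(joints_by_subject(fake))  # [{'nose': (120.0, 40.0)}, {'neck': (300.0, 90.0)}]
```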

```python
# one BGR colour per body part (18 in total)
colors = [[255, 0, 0], [255, 85, 0], [255, 170, 0], [255, 255, 0], [170, 255, 0], [85, 255, 0], [0, 255, 0],
          [0, 255, 85], [0, 255, 170], [0, 255, 255], [0, 170, 255], [0, 85, 255], [0, 0, 255], [85, 0, 255],
          [170, 0, 255], [255, 0, 255], [255, 0, 170], [255, 0, 85]]

canvas = cv2.imread(test_image)
stickwidth = 4

for i in range(len(subject_wise_loc)):          # i: body part
    for n in range(len(subject_wise_loc[i])):   # n: subject
        Y = subject_wise_loc[i][n][1]
        X = subject_wise_loc[i][n][0]
        # skip joints reported as missing, i.e. (-1, -1)
        if np.any(np.asarray(X) < 0) or np.any(np.asarray(Y) < 0):
            continue
        cur_canvas = canvas.copy()
        mX = np.mean(X)
        mY = np.mean(Y)
        # length and orientation of the stick (ellipse) to draw
        length = ((X[0] - X[1]) ** 2 + (Y[0] - Y[1]) ** 2) ** 0.5
        angle = math.degrees(math.atan2(X[0] - X[1], Y[0] - Y[1]))
        polygon = cv2.ellipse2Poly((int(mY), int(mX)), (int(length / 2), stickwidth), int(angle), 0, 360, 1)
        # solid marker on the canvas, ellipse on a copy of it
        cv2.circle(canvas, (int(mY), int(mX)), 10, colors[i], thickness=-1)
        cv2.fillConvexPoly(cur_canvas, polygon, colors[i])
        # blend: 40% current canvas, 60% copy with the ellipse drawn on it
        canvas = cv2.addWeighted(canvas, 0.4, cur_canvas, 0.6, 0)

plt.imshow(canvas[:, :, [2, 1, 0]])  # BGR -> RGB for Matplotlib
fig = plt.gcf()
fig.set_size_inches(12, 12)
```
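The stick drawn with `cv2.ellipse2Poly` is built from two points. The ellipse is centred on their midpoint, its long half-axis is half the distance between them, and it is rotated by the direction angle of the segment. The sketch below isolates that geometry and assumes each point is given as (x, y) in pixel coordinates. The helper name `stick_params` is hypothetical. Its return values can be passed as `cv2.ellipse2Poly(center, (half_length, stickwidth), angle, 0, 360, 1)`.

```python
import math


def stick_params(p1, p2):
    """Centre, half-length and angle (degrees) of an ellipse spanning p1-p2.

    p1, p2: (x, y) pixel coordinates. Integers are returned because
    cv2.ellipse2Poly expects integer arguments.
    """
    (x1, y1), (x2, y2) = p1, p2
    center = (int((x1 + x2) / 2), int((y1 + y2) / 2))   # midpoint of the segment
    length = math.hypot(x1 - x2, y1 - y2)               # segment length
    angle = math.degrees(math.atan2(y1 - y2, x1 - x2))  # rotation of the long axis
    return center, int(length / 2), int(angle)


print(stick_params((6, 8), (0, 0)))      # ((3, 4), 5, 53)
print(stick_params((10, 20), (30, 20)))  # ((20, 20), 10, 180)
```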

In the resulting plot, each body part is marked in a different color, and the joints are grouped by subject. The same module can be applied frame by frame to extract human pose from videos. Possible applications include:

- Security

- Motion Analysis

- Sports
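For motion analysis or sports, a typical next step is to turn keypoints into joint angles. As an illustration, the sketch below computes the right-elbow angle from the right shoulder, elbow and wrist. These are indices 2, 3 and 4 in the body-part order listed earlier. The function returns None if any of the three joints is missing. The function names are hypothetical. The input is assumed to be one subject's (18, 2) slice of the array, e.g. `subject_wise_loc[:, n]`.

```python
import math

import numpy as np

RSHO, RELB, RWRI = 2, 3, 4  # indices in the article's body-part order


def joint_angle(a, b, c):
    """Angle ABC in degrees at vertex b, for 2-D points a, b, c."""
    ba = np.asarray(a, dtype=float) - np.asarray(b, dtype=float)
    bc = np.asarray(c, dtype=float) - np.asarray(b, dtype=float)
    denom = np.linalg.norm(ba) * np.linalg.norm(bc)
    if denom == 0:
        raise ValueError("the three points must be distinct from the vertex")
    cos_angle = np.dot(ba, bc) / denom
    # clip guards against tiny floating-point overshoot outside [-1, 1]
    return math.degrees(math.acos(np.clip(cos_angle, -1.0, 1.0)))


def right_elbow_angle(subject_joints):
    """subject_joints: (18, 2) array of (x, y) for one subject; (-1, -1) = missing."""
    pts = [subject_joints[i] for i in (RSHO, RELB, RWRI)]
    if any(x == -1 and y == -1 for x, y in pts):
        return None
    return joint_angle(*pts)


joints = -np.ones((18, 2))  # made-up subject with only the right arm detected
joints[RSHO] = (0, 0)
joints[RELB] = (0, 10)
joints[RWRI] = (10, 10)
print(right_elbow_angle(joints))  # 90.0
```

Tracking such an angle over the frames of a video gives a simple motion signal, for example to count repetitions of an exercise.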

References:

Skeletal Graph Based Human Pose Estimation in Real-Time

Stacked Hourglass Networks for Human Pose Estimation