Ensemble Learning in Machine Learning

Ensemble learning is the process of strategically generating and combining multiple models, such as classifiers or experts, to solve a particular computational intelligence problem. It is primarily used to improve the performance of a model (in classification, prediction, function approximation, etc.) or to reduce the likelihood of selecting a poor model. Other applications include assigning a confidence to the model's decision, selecting optimal (or near-optimal) features, data fusion, incremental learning, non-stationary learning, and error correction.

An ensemble is itself a supervised learning algorithm, because it can be trained and then used to make predictions. The trained ensemble therefore represents a single hypothesis. This hypothesis, however, is not necessarily contained within the hypothesis space of the models it is built from, so an ensemble can represent a wider range of functions than its base models. In theory, this flexibility allows ensembles to over-fit the training data more than a single model would, but in practice some ensemble techniques (especially bagging) tend to reduce over-fitting.

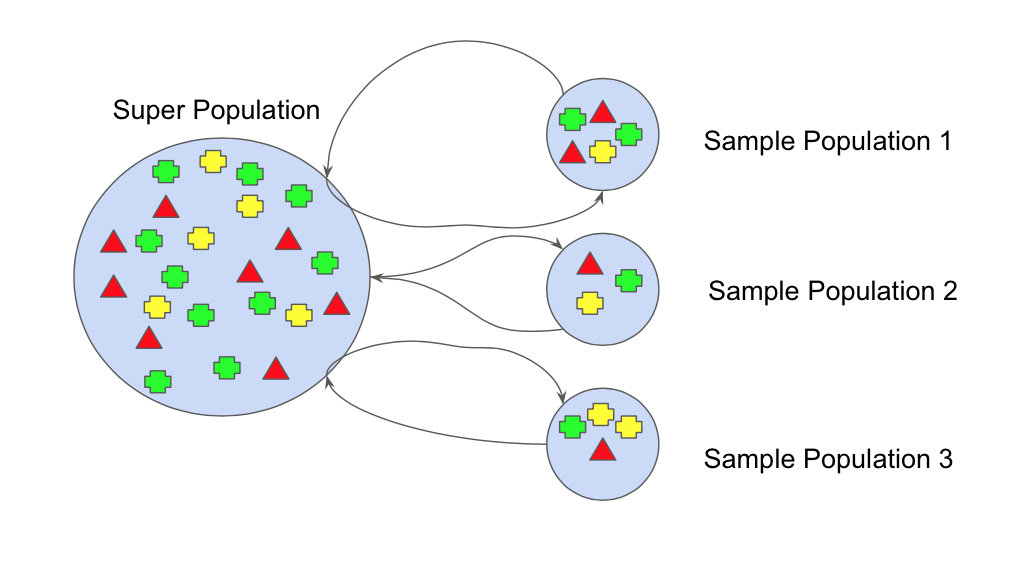

Ensemble Learning. Credit: Scholarpedia

There are many ensemble techniques. The best known are:

Bagging:

Bagging (short for bootstrap aggregating) is a well-known ensemble learning algorithm based on bootstrap sampling. Bootstrapping means random sampling with replacement: a sample is drawn from the dataset one example at a time, every example is equally likely to be chosen on each draw, and an example that has already been drawn can be drawn again. Resampling in this way helps us understand the bias and variance of quantities estimated from the dataset, such as the mean and the standard deviation.
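To make the resampling step concrete, here is a minimal sketch of bootstrap sampling using only the Python standard library. The data values, the function names `bootstrap_sample` and `bootstrap_std_error`, and the choice of 1000 resamples are illustrative assumptions, not something taken from a particular library.

```python
import random
import statistics

def bootstrap_sample(data, rng):
    """Draw len(data) items uniformly at random, with replacement."""
    return [rng.choice(data) for _ in data]

def bootstrap_std_error(data, n_resamples=1000, seed=0):
    """Estimate how much the sample mean varies across bootstrap resamples."""
    rng = random.Random(seed)
    means = [statistics.mean(bootstrap_sample(data, rng))
             for _ in range(n_resamples)]
    return statistics.stdev(means)

# Hypothetical measurements, used only for illustration.
data = [2.1, 3.4, 1.9, 5.6, 4.2, 3.8, 2.7, 4.9]

sample = bootstrap_sample(data, random.Random(0))
print(sample)                     # same size as data; repeated values are expected
print(bootstrap_std_error(data))  # spread of the mean across resamples
```

Because the draws are made with replacement, a resample usually contains some examples more than once and leaves others out, which is what makes the resamples differ from one another.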

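So, in bagging, each model is trained on a different bootstrap sample. The samples may overlap, and because the models do not depend on one another they can be trained in parallel. Their predictions are then combined, typically by voting or averaging.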
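The idea can be sketched in a few lines of plain Python. This is a toy illustration under stated assumptions, not a production implementation: the base model is a one-threshold "decision stump" on a single feature, the dataset is made up, and the helper names (`fit_stump`, `bagging_fit`, `bagging_predict`) are hypothetical.

```python
import random
from collections import Counter

def fit_stump(points):
    """Fit a one-threshold classifier on (x, label) pairs with labels 0/1.

    Tries every observed x as a threshold, in both orientations, and keeps
    the combination with the fewest training errors.
    """
    best = None
    for threshold, _ in points:
        for above in (0, 1):
            errors = sum((above if x > threshold else 1 - above) != y
                         for x, y in points)
            if best is None or errors < best[0]:
                best = (errors, threshold, above)
    _, threshold, above = best
    return lambda x: above if x > threshold else 1 - above

def bagging_fit(points, n_models=25, seed=0):
    """Train each stump on its own bootstrap sample of the data."""
    rng = random.Random(seed)
    models = []
    for _ in range(n_models):
        sample = [rng.choice(points) for _ in points]  # with replacement
        models.append(fit_stump(sample))
    return models

def bagging_predict(models, x):
    """Combine the individual predictions by majority vote."""
    votes = Counter(model(x) for model in models)
    return votes.most_common(1)[0][0]

# Toy data: label 0 for small x, label 1 for large x.
points = [(float(x), 0 if x < 5 else 1) for x in range(10)]
models = bagging_fit(points)
print(bagging_predict(models, 2.0), bagging_predict(models, 7.0))
```

Each iteration of the loop in `bagging_fit` is independent of the others, which is why the training step could be distributed across workers.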

Boosting:

Boosting methods work in the same spirit as bagging methods: we build a family of models that are aggregated to obtain a strong learner that performs better. However, unlike bagging, which mainly aims to reduce variance, boosting fits multiple weak learners sequentially and adaptively: each model in the sequence is fitted giving more importance to the observations that the previous models in the sequence handled badly.

In boosting, we reduce the error step by step by focusing more on the hardest training examples, so the models have to be trained one after another rather than in parallel.
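As one concrete instance of this reweighting scheme, the sketch below implements a small version of AdaBoost with decision stumps in plain Python. AdaBoost is chosen here only as a familiar example of boosting; the toy dataset, the function names and the number of rounds are assumptions made for illustration.

```python
import math

def stump_predict(x, threshold, sign):
    """Predict `sign` above the threshold and `-sign` at or below it."""
    return sign if x > threshold else -sign

def fit_weighted_stump(xs, ys, weights):
    """Return (weighted_error, threshold, sign) for the best stump."""
    best = None
    for threshold in xs:
        for sign in (1, -1):
            err = sum(w for x, y, w in zip(xs, ys, weights)
                      if stump_predict(x, threshold, sign) != y)
            if best is None or err < best[0]:
                best = (err, threshold, sign)
    return best

def adaboost_fit(xs, ys, n_rounds=3):
    """Fit stumps one after another, reweighting the data each round."""
    n = len(xs)
    weights = [1.0 / n] * n
    ensemble = []  # list of (alpha, threshold, sign)
    for _ in range(n_rounds):
        err, threshold, sign = fit_weighted_stump(xs, ys, weights)
        err = min(max(err, 1e-10), 1 - 1e-10)  # guard against log(0)
        alpha = 0.5 * math.log((1 - err) / err)
        ensemble.append((alpha, threshold, sign))
        # Misclassified points gain weight; correctly classified ones lose it.
        weights = [w * math.exp(-alpha * y * stump_predict(x, threshold, sign))
                   for x, y, w in zip(xs, ys, weights)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble

def adaboost_predict(ensemble, x):
    """Weighted vote of all stumps; labels are -1 and +1."""
    score = sum(alpha * stump_predict(x, threshold, sign)
                for alpha, threshold, sign in ensemble)
    return 1 if score >= 0 else -1

# Toy data: a block of -1 labels in the middle, which no single stump can fit.
xs = [float(x) for x in range(10)]
ys = [1, 1, 1, -1, -1, -1, 1, 1, 1, 1]

ensemble = adaboost_fit(xs, ys)
print([adaboost_predict(ensemble, x) for x in xs])
```

On this data the best single stump misclassifies three of the ten points, while the weighted vote of three stumps reproduces every training label. Each round depends on the weights produced by the previous one, so the loop cannot be parallelised the way bagging can.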

Ensemble models are powerful because they address both sources of error in the bias-variance trade-off: bagging mainly reduces variance, while boosting mainly reduces bias. For complicated problems with a lot of variation in the data, ensembles can be very useful for avoiding overfitting. They also feature in many winning solutions in AI and ML competitions (e.g. Kaggle).